Red tide detection using GF-1 WFV image based on deep learning method


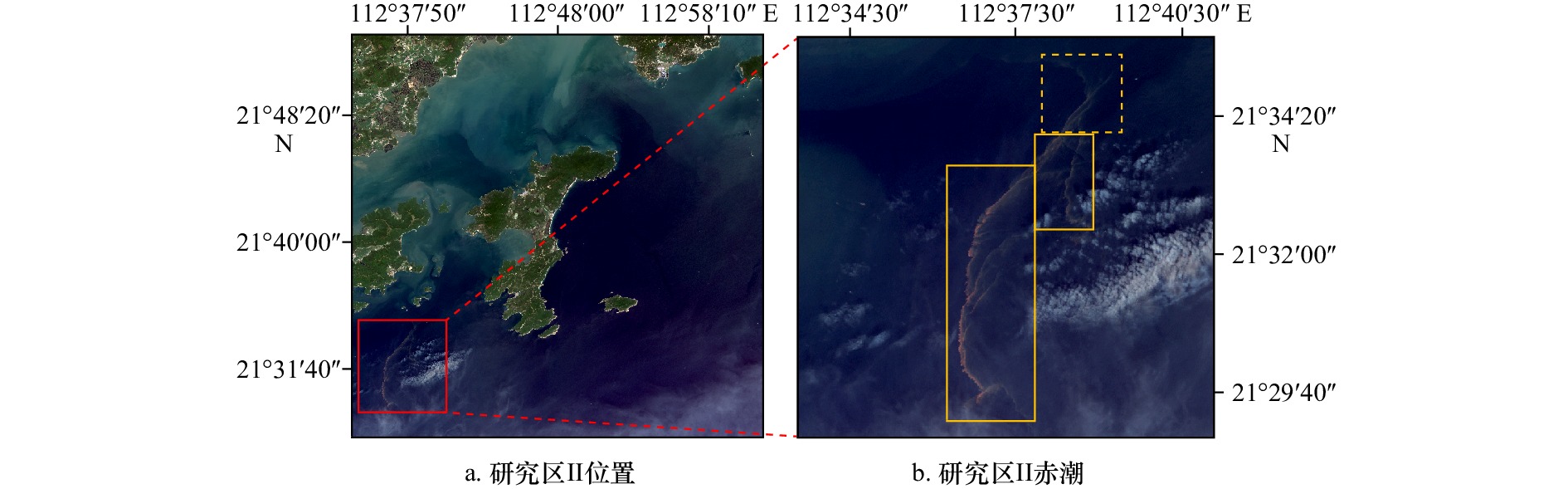

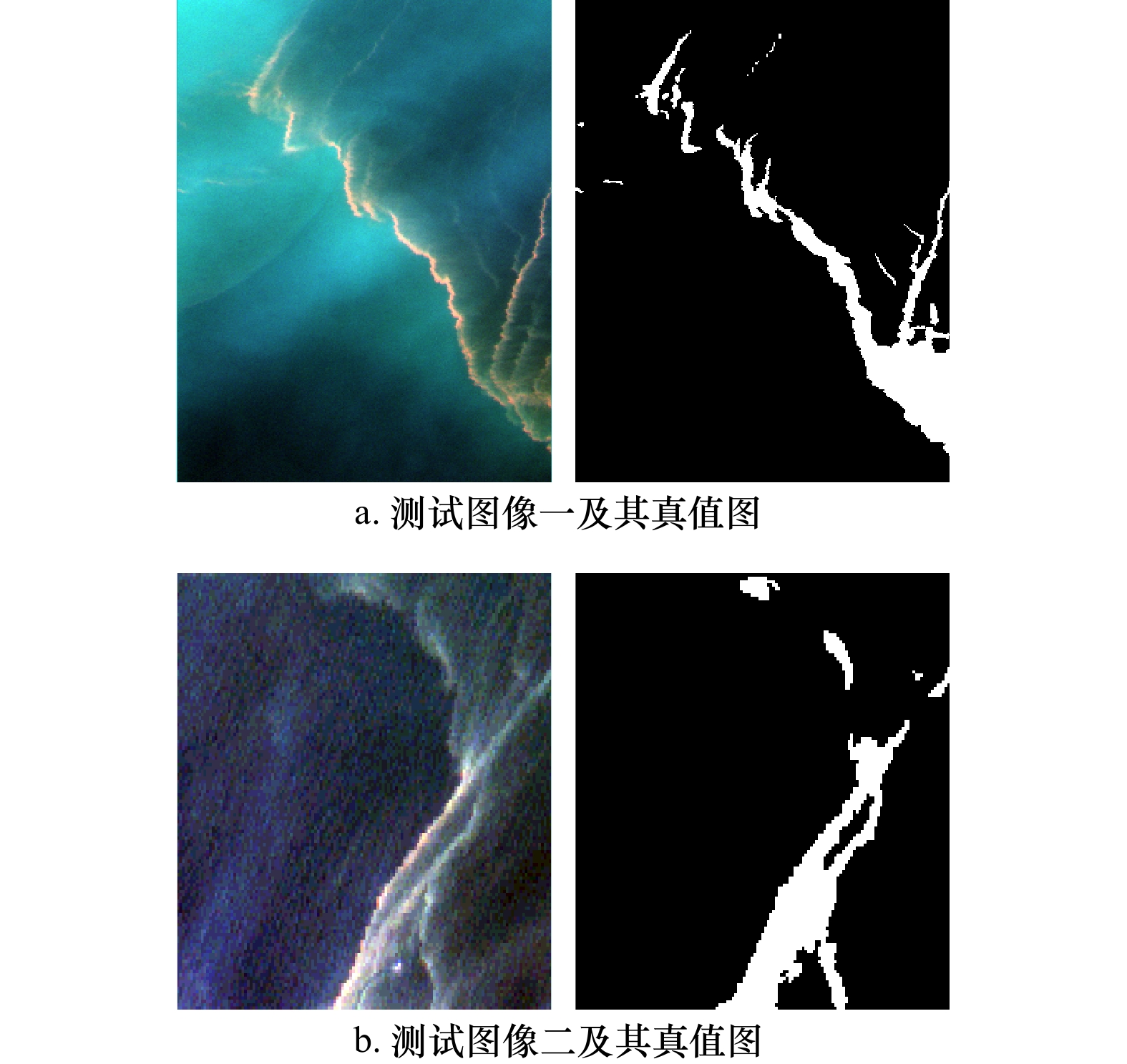

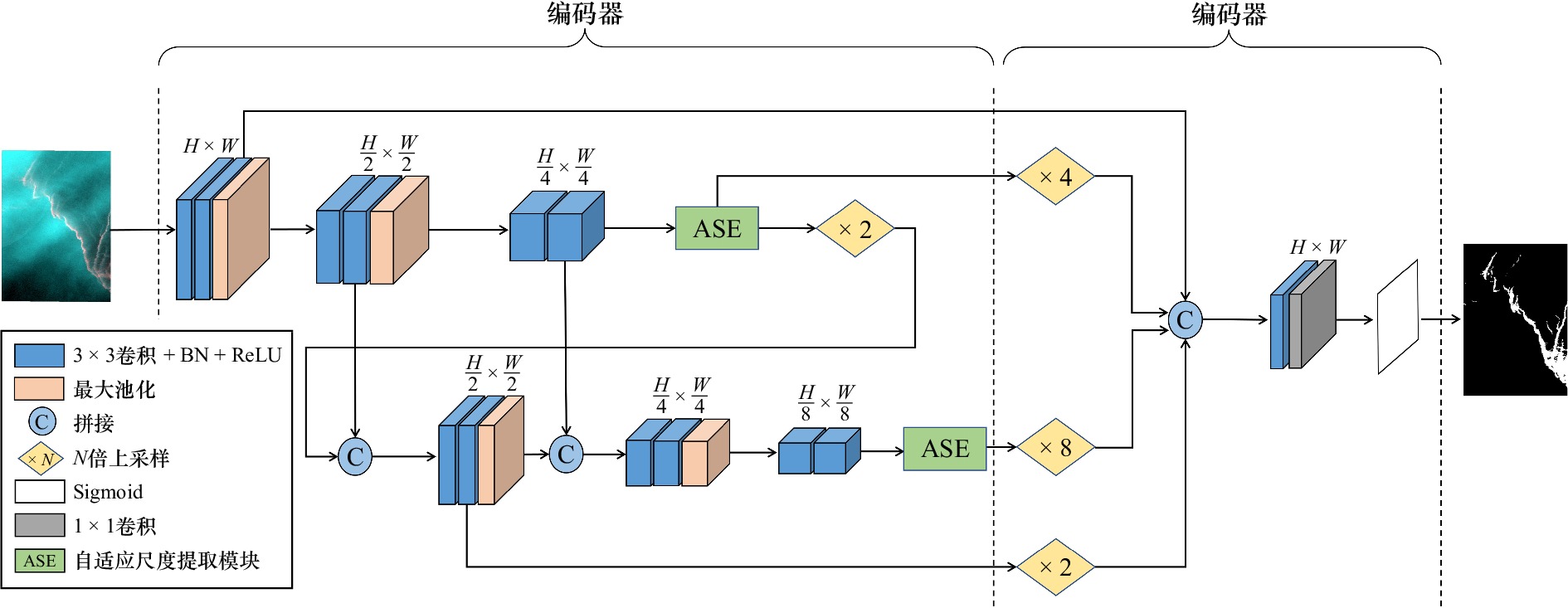

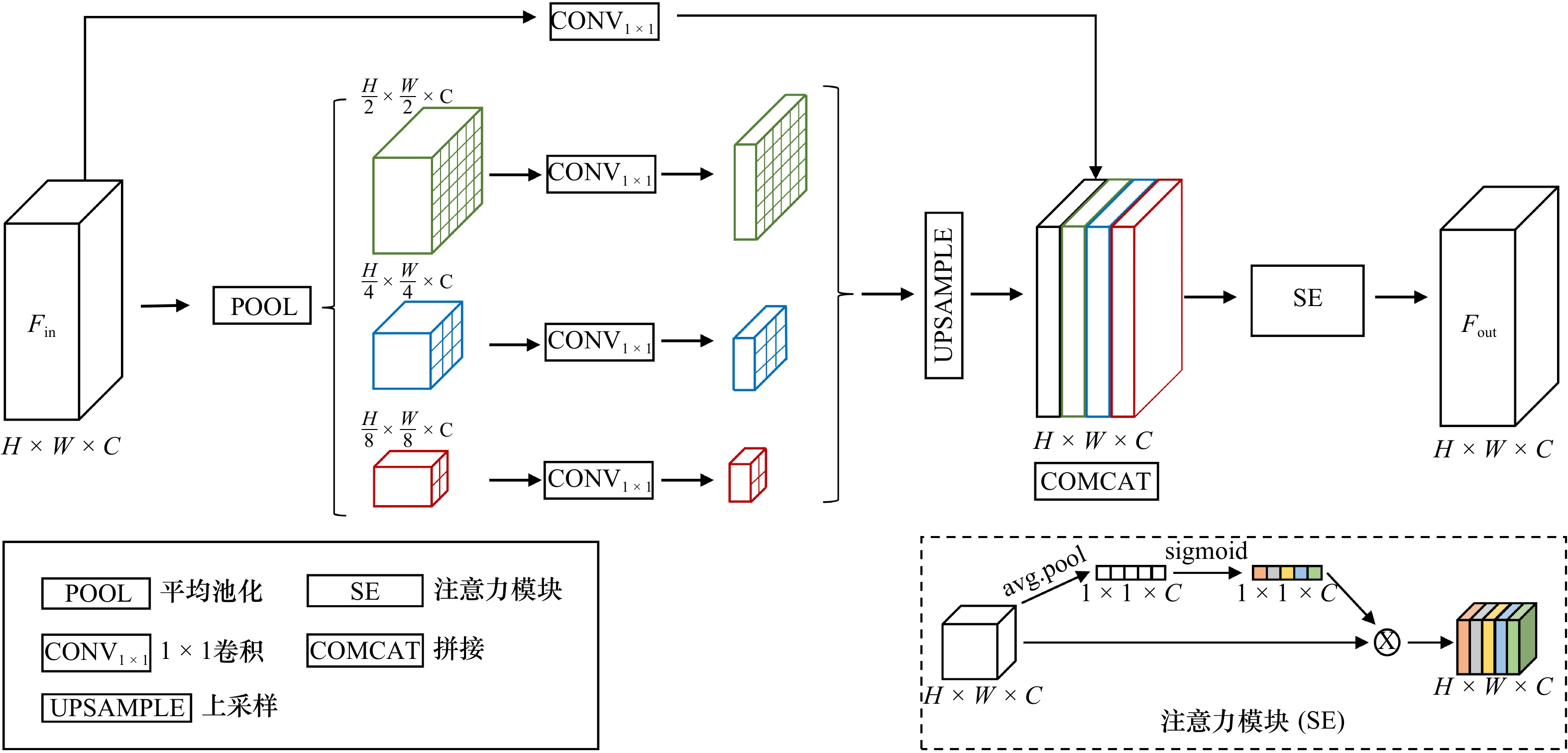

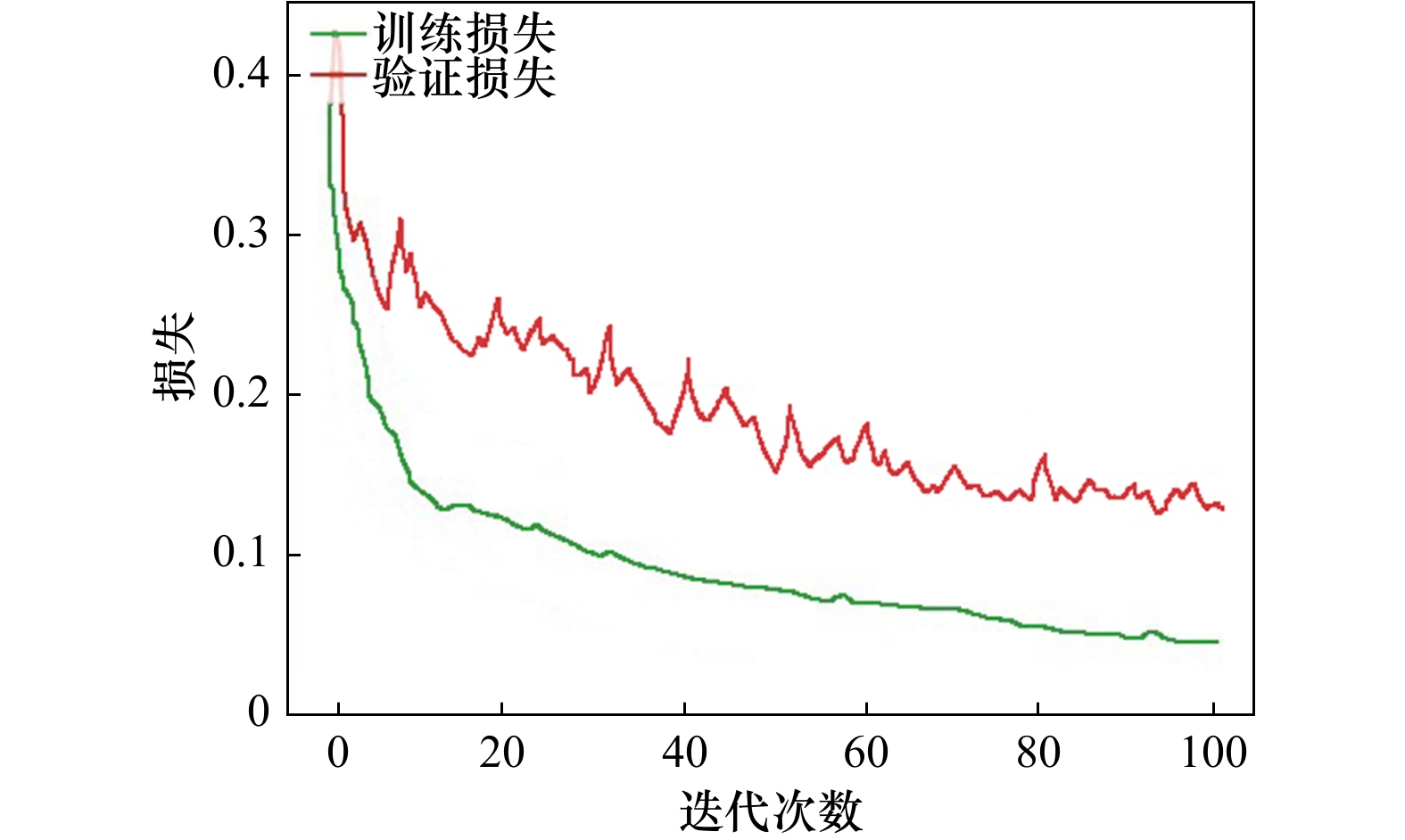

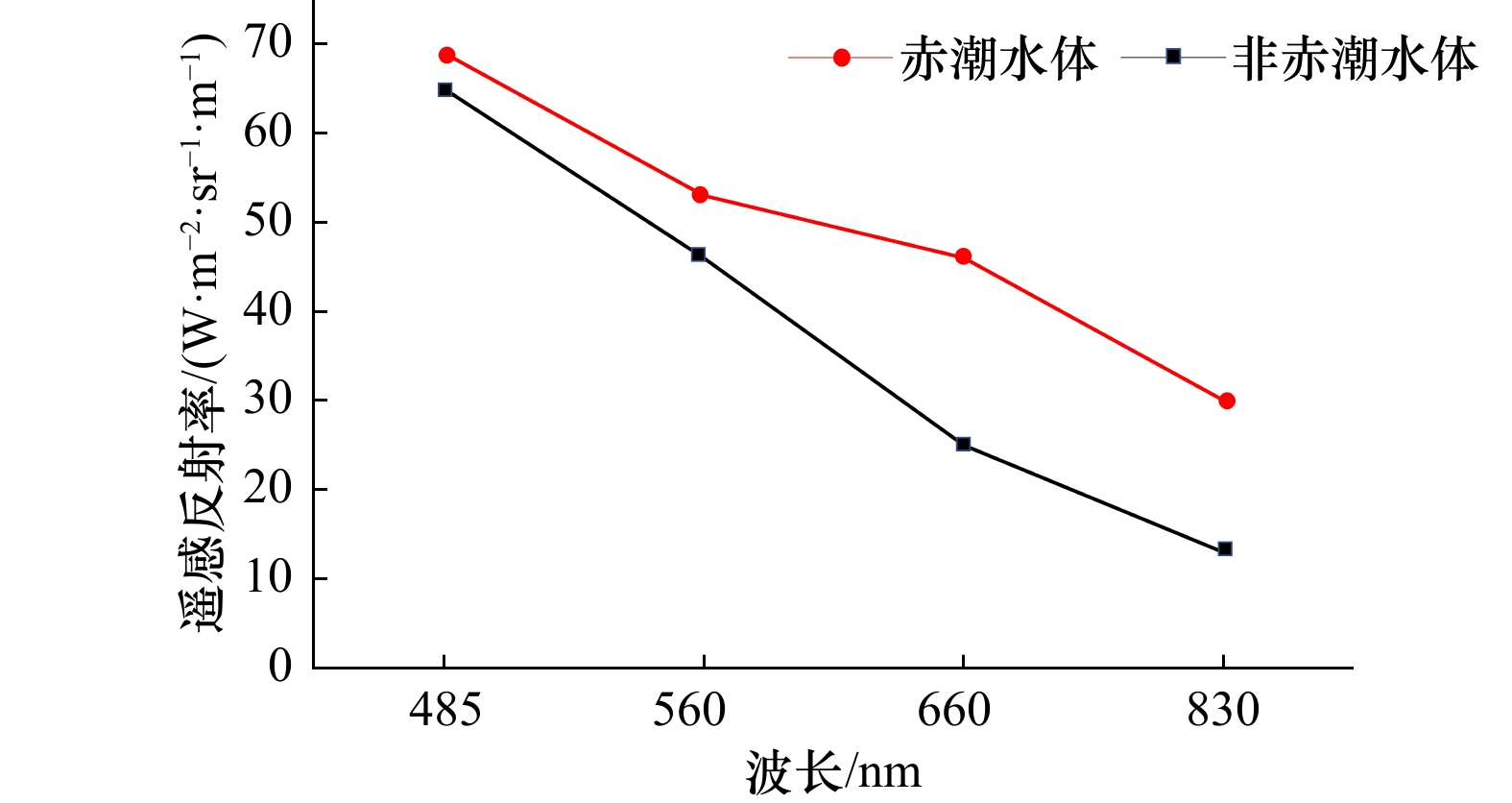

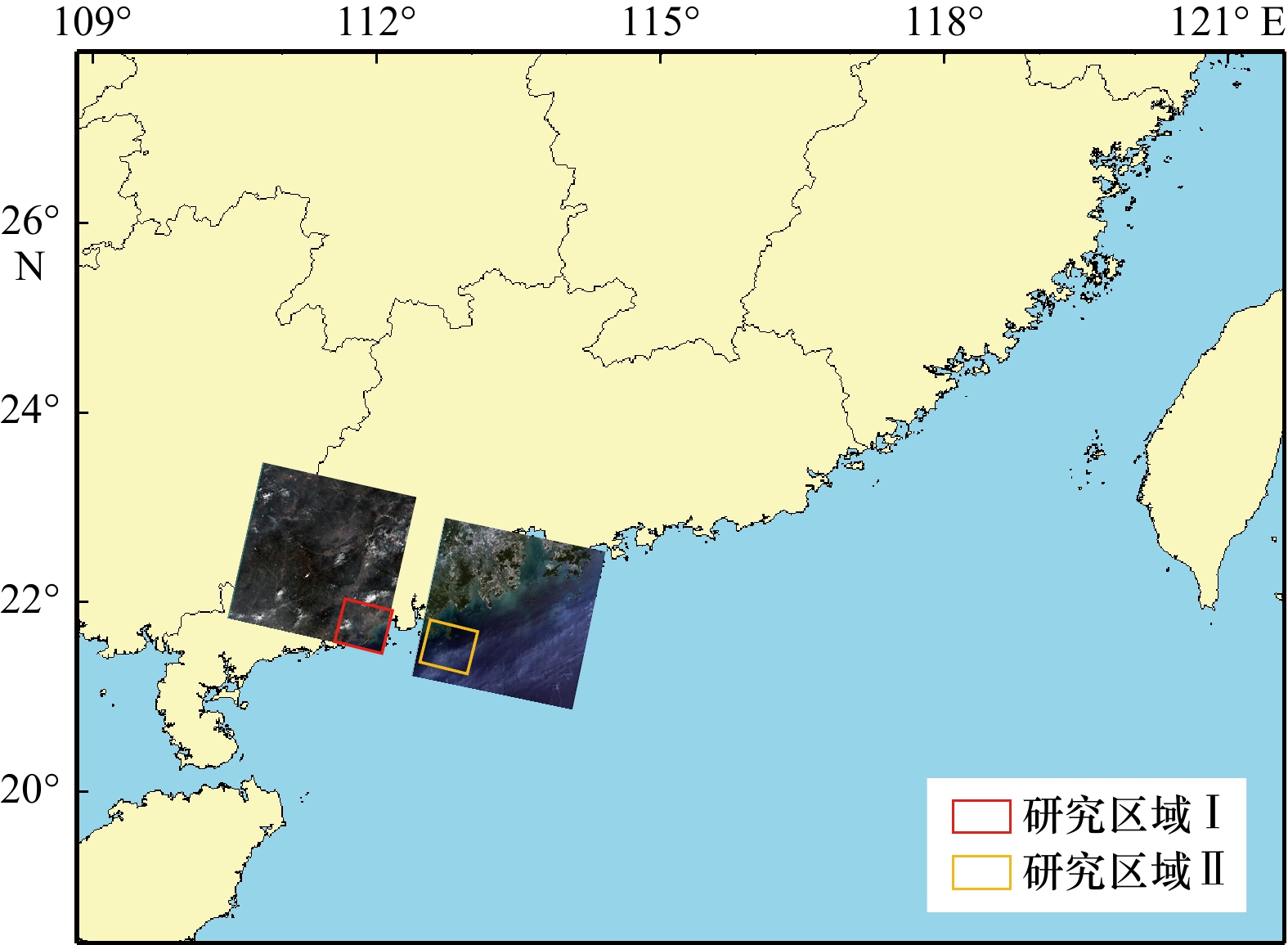

Abstract: Red tide is a major marine ecological disaster in China, and effectively monitoring its occurrence and spatial distribution is of great significance for its prevention and control. Traditional red tide monitoring relies mainly on ocean color satellites with low spatial resolution, which leaves blind spots for the frequent small-scale red tides. GF-1 WFV images offer high spatial resolution, a wide swath, and a short revisit period, and therefore show considerable potential for monitoring small-scale red tides. However, GF-1 WFV images have low spectral resolution and few bands, so traditional red tide detection methods designed for ocean color satellites cannot be applied to them. Moreover, red tides vary in shape and scale, which makes them hard to extract accurately. To address these problems, this paper proposes a scale-adaptive red tide detection network (SARTNet) for GF-1 WFV images. The network adopts a two-level backbone structure to fuse the shape features and detail features of red tide waters, and introduces an attention mechanism to model the correlation between red tide features at different scales, thereby improving its ability to detect red tides with complex distributions. Experimental results show that SARTNet detects red tides more accurately than existing methods, with an F1 score of 0.89 or above; it produces few omissions and false detections for red tides of different scales and is less affected by environmental factors.
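The abstract does not describe the internals of the attention mechanism. As a rough illustration of the general idea only, the sketch below implements a generic squeeze-and-excitation-style channel attention in NumPy. It is not SARTNet's module: the function name `se_channel_attention`, the reduction ratio `r`, and the random weights `w1` and `w2` are all assumptions made for the example.

```python
import numpy as np

def se_channel_attention(features, w1, w2):
    """Generic SE-style channel attention (illustrative, not SARTNet's module).

    features: array of shape (C, H, W)
    w1: array of shape (C // r, C), first fully connected layer (hypothetical)
    w2: array of shape (C, C // r), second fully connected layer (hypothetical)
    """
    # Squeeze: global average pooling gives one descriptor per channel.
    squeezed = features.mean(axis=(1, 2))               # shape (C,)
    # Excitation: bottleneck with ReLU, then sigmoid gating in (0, 1).
    hidden = np.maximum(w1 @ squeezed, 0.0)             # shape (C // r,)
    weights = 1.0 / (1.0 + np.exp(-(w2 @ hidden)))      # shape (C,)
    # Scale: reweight every channel of the feature map.
    return features * weights[:, None, None], weights

# Toy input: 8 channels, reduction ratio 4, random (untrained) weights.
rng = np.random.default_rng(0)
C, r = 8, 4
features = rng.standard_normal((C, 16, 16))
w1 = rng.standard_normal((C // r, C)) * 0.1
w2 = rng.standard_normal((C, C // r)) * 0.1
out, weights = se_channel_attention(features, w1, w2)
```

In a trained network the two weight matrices would be learned, so that channels carrying useful responses at a given scale receive gates closer to 1.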


Key words: red tide detection; GF-1 WFV; deep semantic segmentation; attention mechanism; multi-scale


Tab. 1 GF-1 WFV image parameters

| Parameter | Multispectral wide-field-of-view camera |
| --- | --- |
| Wavelength range | 0.45–0.52 μm; 0.52–0.59 μm; 0.63–0.69 μm; 0.77–0.89 μm |
| Spatial resolution | 16 m |
| Swath width | 800 km |
| Revisit period | 2 d |

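Tab. 2 Quantitative results of red tide information extraction by different methods

| Study area | Method | Precision/% | Recall/% | F1 score | Parameters/M |
| --- | --- | --- | --- | --- | --- |
| I | GF1_RI | 67.64 | 80.77 | 0.73 | − |
| I | U-Net | 84.32 | **91.32** | 0.87 | 31.02 |
| I | PSPNet | **91.94** | 83.62 | 0.87 | 23.60 |
| I | DeepLabv3+ | 88.41 | 85.41 | 0.86 | 12.04 |
| I | SARTNet | 88.81 | 90.87 | **0.89** | 5.01 |
| II | GF1_RI | 79.48 | 60.40 | 0.68 | − |
| II | U-Net | 78.80 | 80.47 | 0.79 | 31.02 |
| II | PSPNet | 84.01 | 83.91 | 0.83 | 23.60 |
| II | DeepLabv3+ | 87.01 | 81.82 | 0.84 | 12.04 |
| II | SARTNet | **91.09** | **87.29** | **0.89** | 5.01 |

Note: bold values denote the maximum of each metric column within each study area.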
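The precision, recall, and F1 columns are standard pixel-wise classification metrics. A minimal sketch of how such values could be computed from binary masks follows, assuming 1 marks red tide pixels; the function names and the toy masks are illustrative and not taken from the paper. The helper `f1_from_percent` recomputes F1 from tabulated precision and recall; for the two SARTNet rows the result agrees with the reported 0.89 to within 0.01.

```python
import numpy as np

def precision_recall_f1(pred, truth):
    """Pixel-wise precision, recall and F1 for binary masks (1 = red tide)."""
    pred = np.asarray(pred, dtype=bool)
    truth = np.asarray(truth, dtype=bool)
    tp = np.count_nonzero(pred & truth)    # red tide correctly detected
    fp = np.count_nonzero(pred & ~truth)   # false detections
    fn = np.count_nonzero(~pred & truth)   # omissions
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    total = precision + recall
    f1 = 2 * precision * recall / total if total else 0.0
    return precision, recall, f1

def f1_from_percent(precision_pct, recall_pct):
    """Harmonic mean of precision and recall given in percent."""
    p, r = precision_pct / 100.0, recall_pct / 100.0
    return 2 * p * r / (p + r)

# Toy masks (hypothetical data): 2 true positives, 1 false positive, 1 omission.
pred = np.array([[1, 1, 0], [0, 1, 0]])
truth = np.array([[1, 0, 0], [0, 1, 1]])
p, r, f1 = precision_recall_f1(pred, truth)

# SARTNet rows of Tab. 2: study area I and study area II.
f1_area1 = f1_from_percent(88.81, 90.87)
f1_area2 = f1_from_percent(91.09, 87.29)
```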

Tab. 3 ASE module ablation experimental results

| ASE | Precision/% | Recall/% | F1 score |
| --- | --- | --- | --- |
| Without | 88.89 | 86.50 | 0.87 |
| With | 90.01 | 89.08 | 0.89 |

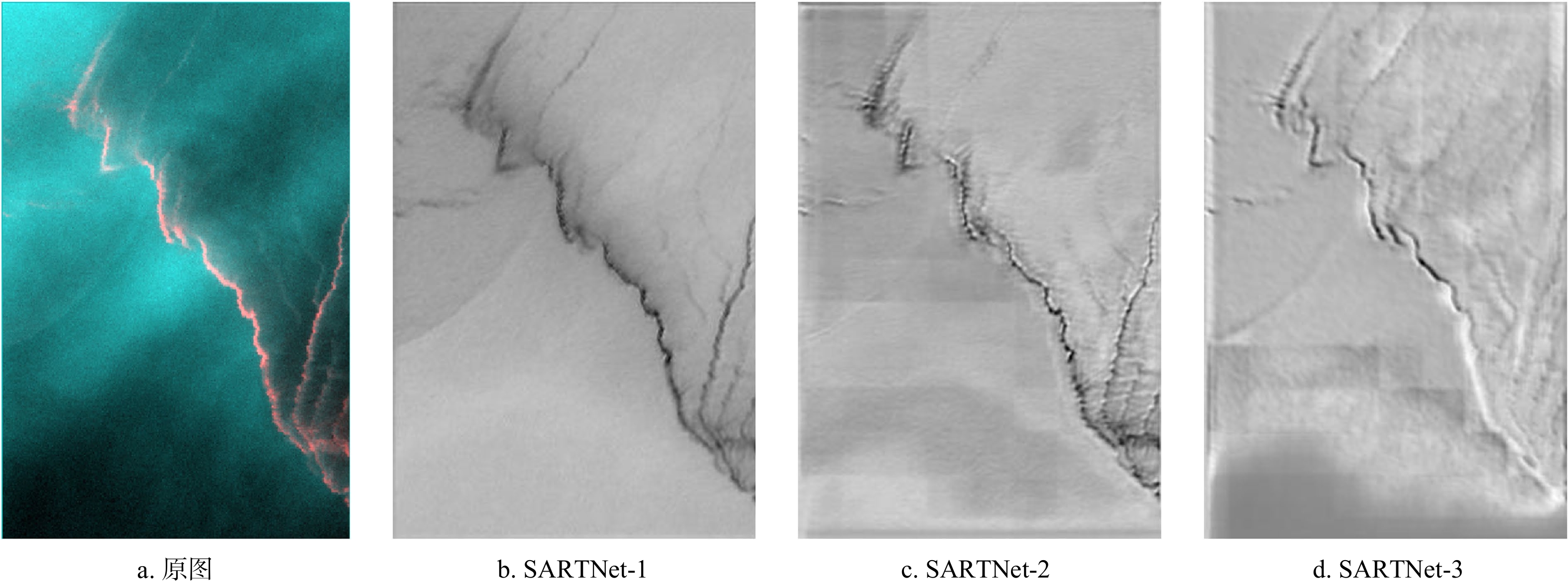

Tab. 4 Quantitative results of red tide information extraction with backbones of different levels

| Backbone level | Precision/% | Recall/% | F1 score |
| --- | --- | --- | --- |
| SARTNet-1 | 91.79 | 85.44 | 0.88 |
| SARTNet-2 | 90.01 | 89.08 | 0.89 |
| SARTNet-3 | 88.49 | 88.50 | 0.88 |

Tab. 5 Quantitative results of experiments with different band settings

| Band setting | Precision/% | Recall/% | F1 score |
| --- | --- | --- | --- |
| 4 bands | 88.81 | 90.87 | 0.89 |
| 4 bands + NDVI | 87.08 | 60.45 | 0.71 |
| 4 bands + GF1_RI | 87.54 | 91.65 | 0.89 |
| 4 bands + GF1_RI + NDVI | 87.17 | 67.91 | 0.76 |
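To make the "4 bands + NDVI" setting concrete, the sketch below appends an NDVI channel to a four-band image using the standard definition NDVI = (NIR − Red) / (NIR + Red). The band order (blue, green, red, near-infrared) is assumed from the wavelength ranges in Tab. 1, and the function names and toy reflectances are illustrative. The GF1_RI index is left out because its formula is not given in this section.

```python
import numpy as np

def ndvi(red, nir, eps=1e-6):
    """Normalized difference vegetation index; eps guards against division by zero."""
    red = np.asarray(red, dtype=np.float32)
    nir = np.asarray(nir, dtype=np.float32)
    return (nir - red) / (nir + red + eps)

def stack_with_ndvi(image):
    """Append NDVI as a fifth channel.

    image: array of shape (4, H, W), assumed band order blue, green, red, NIR.
    Returns an array of shape (5, H, W).
    """
    image = np.asarray(image, dtype=np.float32)
    index = ndvi(image[2], image[3])
    return np.concatenate([image, index[None]], axis=0)

# Toy 2 x 2 image (hypothetical reflectances): red = 0.1, NIR = 0.3 everywhere.
demo = np.zeros((4, 2, 2), dtype=np.float32)
demo[2] = 0.1
demo[3] = 0.3
stacked = stack_with_ndvi(demo)
```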

Tab. 6 Experimental results of model applicability analysis

| Image | Precision/% | Recall/% | F1 score |
| --- | --- | --- | --- |
| GF-1 WFV3 image | 91.37 | 86.70 | 0.89 |
| GF-1 WFV4 image | 89.16 | 87.05 | 0.88 |

